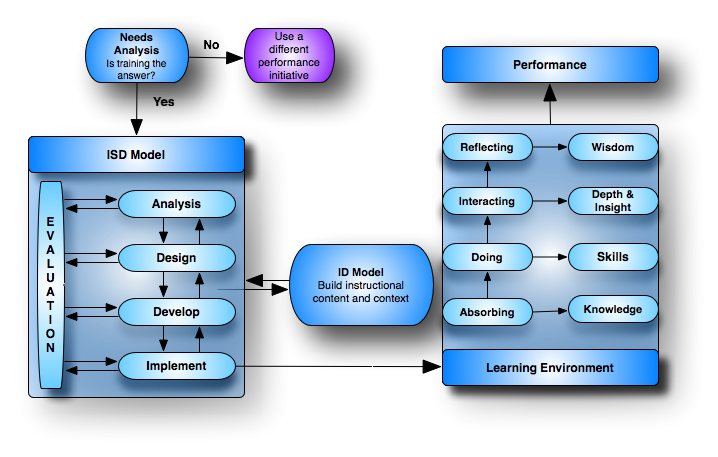

Clark Quinn has an informative post where he discusses the need for Performance Analysis, Learner Experience Design, and ADDIE. I think I have it somewhat summed up in this chart I created for my web site (note that clicking the chart will bring up a larger image that has clickable links):

Performance Design Concept Map


Performance

ADDIE and other ISD and ID models were never designed to diagnose performance problems, so when you are confronted with one, you first need to discover its actual cause. Note that some managers will present every performance problem as a “training” problem, which means you need to confirm that the problem really is training-related rather than one that requires some other performance solution.

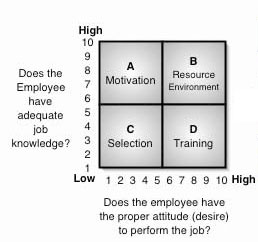

The Performance Analysis Quadrant (PAQ) is a tool to help identify that cause. Answering two questions, “Does the employee have adequate job knowledge?” and “Does the employee have the proper attitude (desire) to perform the job?”, and assigning each answer a numerical rating between 1 and 10 places the employee in one of four performance quadrants:

Performance Analysis Quadrant (PAQ)

- Quadrant A (Motivation): If the employee has sufficient job knowledge but an improper attitude, this may be classed as a motivational problem. The consequences (rewards) of the person's behavior will have to be adjusted. This is not always bad, as the employee simply might not realize the consequences of his or her actions.

- Quadrant B (Resource/Process/Environment): If the employee has both job knowledge and a favorable attitude, but performance is unsatisfactory, then the problem may be outside the employee's control, e.g., a lack of resources or time, a task that needs process improvement, or a workstation that is not ergonomically designed.

- Quadrant C (Selection): If the employee lacks both job knowledge and a favorable attitude, that person may be improperly placed in the position. This may imply a problem with employee selection or promotion, and suggest that a transfer or discharge be considered.

- Quadrant D (Training and/or Coaching): If the employee desires to perform but lacks the requisite job knowledge or skills, then some type of learning solution is required, such as training or coaching.
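The quadrant logic above is essentially a two-question lookup, which can be sketched in a few lines. This is a minimal sketch, not an official PAQ scoring rule: the post does not say where the dividing line between "adequate" and "inadequate" falls on the 1 to 10 scale, so the cutoff of 5 (ratings of 6 and above count as adequate) is an assumption.

```python
from enum import Enum


class Quadrant(Enum):
    A = "Motivation"
    B = "Resource/Process/Environment"
    C = "Selection"
    D = "Training and/or Coaching"


# Assumption: ratings above this cutoff count as "adequate"; the PAQ description
# in the post does not specify a cutoff.
CUTOFF = 5


def paq_quadrant(knowledge: int, attitude: int, cutoff: int = CUTOFF) -> Quadrant:
    """Place an employee in a PAQ quadrant from two 1-10 ratings."""
    for name, value in (("knowledge", knowledge), ("attitude", attitude)):
        if not 1 <= value <= 10:
            raise ValueError(f"{name} rating must be between 1 and 10, got {value}")
    knows = knowledge > cutoff  # adequate job knowledge?
    wants = attitude > cutoff   # proper attitude (desire) to perform?
    if knows and not wants:
        return Quadrant.A  # knows how, lacks desire
    if knows and wants:
        return Quadrant.B  # knows how and wants to: look at resources/process/environment
    if not knows and not wants:
        return Quadrant.C  # neither: possible selection/placement problem
    return Quadrant.D      # wants to, lacks knowledge: learning solution


print(paq_quadrant(3, 9).value)  # prints "Training and/or Coaching"
```

Note that only Quadrant D points to a learning solution; the other three route the problem away from training, which is the point of running the check before reaching for ADDIE.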

Learner Experience Design

Not only should you always use a tool, such as the one above, to discover the true cause of a performance problem, but ADDIE should almost never be used as a stand-alone solution. As a member of the ISD family, it is very broad in nature, so it does not go into much detail. Treat it as a plug-and-play framework: Clark wrote of Learner Experience Design, but you can add any other components to it on an as-needed basis, such as Action Mapping, 4C/ID, and Prototyping.

ADDIE

Clark noted in his post that one of ADDIE's failures was being a waterfall method, but as I noted in a past post, ADDIE evolved into a dynamic method in the mid-eighties. ADDIE does make a good checklist; however, use it wisely. If you blindly follow it, then it is nothing more than a process model. However, if you use it in a more creative fashion, then it becomes a true ISD model that enhances the design of the learning process.

12 comments:

Donald, I agree that ADDIE has evolved, and that it is particularly focused on courses, so would need 'wrapping' to address other needs. I note that your PAQ has essentially the same structure as the situational leadership model (with different prescriptions). Lovely synergy.

Hi Clark,

I agree that it needs much better wrapping to address other needs. However, having been formally trained in ISD, I can say somewhat in its defense that one of the first things they pound into your head is that when "Selecting the Instructional Setting" within the Analysis Phase, you should select the simplest method possible, since classroom courses are the most difficult and expensive means. For example:

1. Select a performance/job aid if at all possible

2. If not, then select on-the-job training

3. If that is not feasible, then select self-paced training before lockstep training.
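The rule in this list is an ordered preference: take the first option that works. A minimal sketch, assuming the caller already knows which settings are feasible for the task (the function name and the setting labels are illustrative, not part of any ISD standard):

```python
# Preference order from the comment: simplest and cheapest first,
# lockstep (classroom) training last.
PREFERENCE_ORDER = [
    "performance/job aid",
    "on-the-job training",
    "self-paced training",
    "lockstep training",
]


def select_setting(feasible: set[str]) -> str:
    """Return the first (simplest) setting in PREFERENCE_ORDER that is feasible."""
    for setting in PREFERENCE_ORDER:
        if setting in feasible:
            return setting
    raise ValueError("no feasible instructional setting was supplied")


print(select_setting({"on-the-job training", "lockstep training"}))  # prints "on-the-job training"
```

Note that this returns exactly one setting, which is the "select one" reading of the list.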

As far as the PAQ, I wish I could take credit for it, but that concept has been around forever (long before the web or the Situational Leadership Model). I'm not sure who came up with it; it might be one of those concepts that spread so fast that its origin is hard to identify.

I think there is something implied but not often articulated to folks who consume these frameworks: a solution class isn't an either/or proposition.

I've seen many needs analyses that end in binary forks (the problem will either be solved with training or it won't). This may be for the convenience of the stakeholder, who is participating in the needs analysis to check a box and get their training.

In my opinion, this binary forking creates a terribly simplified solutions view.

Performance solutions are rarely an either / or, simple proposition. Good designers will recognize this. It doesn't help that most of the stuff I've seen that defines models and frameworks points to a Y in the road.

There's plenty of integration potential between classes and types of solution. Examining a task or subtask on its own merit, establishing solution probabilities from a wide range of solution classes (including 'not solving it at all'), and evaluating at a level that enables isolation is the practice of a good performance problem solver (designer).

I think that this binary mindset is part of the reason the vast toolsets available to problem solvers fail in application. It is a failure of application, not a failure of the toolsets themselves.

We argue about the validity of stuff like Bloom's Taxonomy and ADDIE, neither of which is prescriptive or displaces the real tools of the designer... the tools that rest on the neck of the problem solver.

To illustrate this point, I'll use the select-one conditional example in Don's comment:

1. Select a performance/job aid if at all possible

2. If not, then select on-the-job training

3. If that is not feasible, then select self-paced training before lockstep training.

****

I'd like to start seeing more folks look at these as layers of consideration. Something more like this...

If the needs assessment or subsequent analysis identifies that knowledge and skills (K&S) contribute to the performance gap:

1. Select a performance / job aid if this will address the problem.

2. Carry the job aid forward and support on-the-job training for elements that the job aid does not fully support.

3. If on-the-job training supported by the job aid cannot fully support the performance need, augment with self-paced training or other training opportunities that do not require travel / co-location. Carry appropriate elements forward from other layers.

4. If any of the above in combination cannot solve the problem alone, extend them as necessary with the appropriate resident or face-to-face training solution. Leverage other layers as appropriate. Don't leave performance support out of the classroom!
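The difference between the layered view and the select-one view can be shown by returning a stack of layers instead of a single pick. This is a minimal sketch under loudly stated assumptions: the performance need is modeled as a set of hypothetical "performance elements", and each layer is assumed to cover a known subset of them. A layer is added only if it covers something still uncovered, and earlier layers stay in the stack rather than being replaced.

```python
# Layers in the order Steve lists them; earlier layers are carried forward.
LAYERS = [
    "performance/job aid",
    "on-the-job training",
    "self-paced training",
    "face-to-face training",
]


def layer_solutions(needs: set[str], supports: dict[str, set[str]]) -> list[str]:
    """Stack layers until every need is covered, keeping each earlier layer in place.

    `needs` and `supports` are hypothetical inputs: the performance elements in the
    gap, and which of those elements each layer can address.
    """
    uncovered = set(needs)
    stack: list[str] = []
    for layer in LAYERS:
        if not uncovered:
            break  # the layers already stacked cover the whole need
        helped = uncovered & supports.get(layer, set())
        if helped:
            stack.append(layer)  # add on top of (not instead of) earlier layers
            uncovered -= helped
    if uncovered:
        raise ValueError(f"no layer addresses: {sorted(uncovered)}")
    return stack


needs = {"recall steps", "hands-on practice", "judgment calls"}
supports = {
    "performance/job aid": {"recall steps"},
    "on-the-job training": {"hands-on practice", "recall steps"},
    "face-to-face training": {"judgment calls"},
}
print(layer_solutions(needs, supports))
# prints ['performance/job aid', 'on-the-job training', 'face-to-face training']
```

The job aid remains in the result even when classroom training is added, which mirrors "Don't leave performance support out of the classroom!"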

I'm confident that Don and Clark are down with the concept of layering solutions. I'm not sure that most of the field sees things the same way.

Performance support tools are magical, in my opinion. No other class of skill support solution has the power to speed time to performance, augment recall, or support skill building through independent practice as well as a well-designed performance support package!

Apologies for multiple comments:) This is something I'm pretty passionate about.

I built this up last year, based on a solution deployment integration model I sketched up several years ago and amplified / inspired by some things Clark Quinn has been talking about. This illustrates the layered solution framework / ecosystem model in another way.

http://www.xpconcept.com/ecosystem.pdf

Steve, thanks for the comments; I quite agree with you.

Cheers,

Don

Steve, I think 'layering' / 'ecosystem' ideas are spot on.

The only thing I'd add is a little suggestion (and it's not the first time I've said this, I know) about the main cause of the ADDIE/non-ADDIE battle/debate/proxy war.

The people I speak to who are in the 'anti-ADDIE camp' are using formal-ISD-processes as a metonym for a feeling that many modern 'training' problems won't yield to the A of analysis.

So - and this is what I think rather than what other people have said - the PAQ is great. And ADDIE is a useful starting point/framework for shared communication. But they're limited.

I'm not talking about some mystical epistemological 'our route to performance improvement is unknowable' nonsense (of the sort that you get from some of the more enjoyable but less useful #KM people). But rather that the A in ADDIE is uneconomic in the face of rapid iteration.

It's not that ADDIE lacks validity but that it's an unnecessary transaction cost.

There's a fundamental myth about training AND education that we're seeking to provide the best 'learning experience' we can. I think this is naive - we're aiming to deliver the cheapest and least-bad for a lot of the time.

Formal ISD processes are often very expensive.

Simon,

I'd agree with you on the cost effectiveness of analysis as an entry filter. I've been trying to adjust our rigorous entry processes. In my organization, we're overcome by a layer of analysis for new performance planning or diagnosis that uses the Harless system.

It's not that this system is terrible, other than that it seems to focus on the connections between behavior output and accomplishment while ignoring important elements of the performance ontology, like cognitive tasks. But I've seen far too many 500-page study reports that go nowhere and have no impact. Don't get me wrong, there's great stuff in those reports (if you can wade through it). But the energy drained out of the effort long ago. Success is, in large part, dependent on energy management.

My view of (A) is that we're looking for a level of probable confidence. Confidence that we've found the problem and identified most (never gonna find em all) of the performance details associated with the problem. Confidence that the solution(s) we've identified have a high probability of solving the problem.

I've been trying to convince my analysis brethren that by taking strong postulates and implementing or eliminating them, we can reach satisfactory solutions much faster, and probably more accurately, than through the drawn-out process of analysis.

I like to use health diagnosis as a metaphor. If someone comes in complaining about foot pain, they're probably right. Why would you order a full diagnostic (stool sample, body scan, hearing test) to ensure you've caught all of the possible symptoms? By the time you're done with the diagnostic, the patient will probably have solved their own problem.

By starting with a 50%+ probability that the customer / stakeholder is right when they answer this question:

* What do you think the problem is?

And adjusting the probability as you continue to explore through questioning or experimentation, you'll quickly reach a level of confidence that is good enough. You've either found the answer or you haven't; evaluation is the only way to know. It's the same whether you've gone through a long analysis or rapidly punched through to strong postulates early on.

You can't get anywhere near 100% confidence without an evaluation. Good enough may not provide as high a pre-solution confidence score as a rigorous analysis, but it might just be close enough. And if all the energy drained out of the effort while you were focused on filling boxes with stuff, the confidence level won't matter anyway.
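Steve doesn't give a formula for "adjusting the probability," but one common way to model it is Bayes' rule; that choice is ours, not his. In this sketch, each probe (a question or a small experiment) is summarized by two hypothetical estimates: how likely its result would be if the stakeholder's diagnosis were right, and how likely if it were wrong. The loop stops once confidence passes a "good enough" threshold. The result is still only pre-solution confidence; as the comment says, evaluation is the only way to know.

```python
def update(prior: float, p_if_right: float, p_if_wrong: float) -> float:
    """Bayes' rule: probability the diagnosis is right after one observation.

    p_if_right / p_if_wrong: how likely the observed result would be if the
    diagnosis were right / wrong (hypothetical estimates supplied by the designer).
    """
    weighted_right = prior * p_if_right
    weighted_wrong = (1 - prior) * p_if_wrong
    return weighted_right / (weighted_right + weighted_wrong)


def probe_until_good_enough(
    prior: float,
    observations: list[tuple[float, float]],
    good_enough: float = 0.8,
) -> tuple[float, int]:
    """Apply observations one at a time; stop as soon as confidence is good enough.

    Returns (final confidence, number of probes used). This is pre-solution
    confidence only; it does not replace evaluating the deployed solution.
    """
    p = prior
    for count, (p_if_right, p_if_wrong) in enumerate(observations, start=1):
        p = update(p, p_if_right, p_if_wrong)
        if p >= good_enough:
            return p, count
    return p, len(observations)


# Start at 50% that the stakeholder is right; each probe result is assumed
# twice as likely if they are right (0.8) as if they are wrong (0.4).
confidence, probes = probe_until_good_enough(0.5, [(0.8, 0.4)] * 3, good_enough=0.75)
print(probes, round(confidence, 3))  # prints "2 0.8"
```

With these made-up numbers, two supporting probes move confidence from 0.5 to about 0.8, which illustrates how quickly a strong starting postulate can reach "good enough."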

Cheers,

Steve

The way I have always pictured ADDIE is that it is one of the models that every instructional designer needs to know how to use; however, just like any other model, we don't need to follow every step within it for every project we do. Again, if we just blindly follow it, then it cheapens it to a process model.

Thus I'm in agreement with both Simon and Steve that shops that blindly follow it are nothing more than ISD shops, while shops that use it as a helpful guide, rather than a rule, are true performance improvement shops.

This is especially true when we need to produce faster through iterative approaches - just good enough to meet our customers’ needs for the first round, and then improve it as needed.

The second comment on this page describes an interesting way of using ADDIE in a mindmap: http://mindmappingsoftwareblog.com/how-do-you-use-mind-mapping-software-for-projects/

Has anyone tried something similar? It might help to take it from less of a process to more of a brainstorming session.

Don,

We've used MindManager (I love to break it out when I can to capture and organize the ambiguous) for performance improvement projects. I've also used it to rough out some design and communication strategies.

It does a great job mapping performance ontologies. It's easy to build up a task map by accomplishment. Gives lots of options for task attributes and other related data if you have it (cog task data).

SMEs seem to go through a cycle of "huh" to delight as a map comes together during brainstorming sessions. I advocate capturing things initially in an unorganized, one-dimensional way, then moving to create the hierarchies. The tool makes it easy to restructure as clarity sets in:

"Ah, I've never really thought of all of these things at once - those two tasks are really closely related"

I find it's more helpful for driving exploration and group questioning than actual capture and display as an artifact.

We use ThinkTank (GroupSystems) as well, which is a welcome accelerator for gaining concurrence and picture clarity. I'd imagine these tools could be pretty powerful in combination, though I've never attempted to leverage them in this way.

On the subject of Mindmapping - here's an interesting article on using the process / approach for creative communication design. Not too far off of the goals of performance solution:

Using mind maps to provide creative direction
